1 Major types of remote sensing systems and platforms

1.1 Remote sensor types

1.2 Remote sensing satellites

1.3 Manned aircraft-based imaging systems

1.4 UAS-based imaging systems

2 Airborne multispectral imaging systems

2.1 Industrial cameras

Fig. 1 A four-camera imaging system (lower left) mounted together with a hyperspectral camera (upper left), a thermal camera (upper right), and two consumer-grade RGB and NIR cameras (lower right) owned by the USDA-ARS at College Station, Texas.

2.2 Consumer-grade cameras

Fig. 2 A two-camera imaging system developed at the USDA-ARS Aerial Application Technology Research Unit in College Station, Texas, attached to the left step of an Air Tractor 402B via a camera mount (yellow box).

3 Airborne hyperspectral imaging sensors

4 Airborne imagery application examples

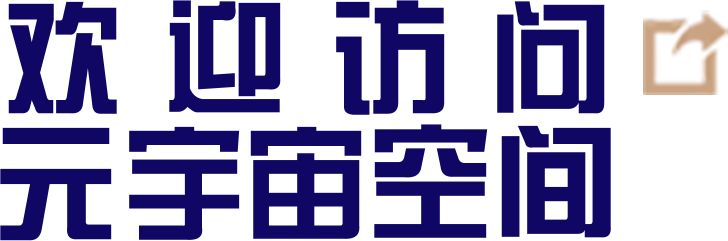

4.1 Color-infrared imagery for creating management zones and yield maps

Fig. 3 Airborne image and maps for a 6 hm² grain sorghum field in south Texas (Adapted from [69])
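The caption does not say how the management zones were derived from the color-infrared image. One common approach, sketched here only as an illustration and not necessarily the procedure used in [69], is to compute NDVI from the NIR and red bands and cluster the pixel values into a few zones with k-means. The function names `ndvi` and `kmeans_1d` are hypothetical, and plain Python lists stand in for image rasters.

```python
from typing import List, Sequence


def ndvi(nir: Sequence[float], red: Sequence[float]) -> List[float]:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); 0.0 where NIR + Red is zero."""
    result = []
    for n, r in zip(nir, red):
        total = n + r
        result.append((n - r) / total if total != 0 else 0.0)
    return result


def kmeans_1d(values: Sequence[float], k: int, iterations: int = 100) -> List[int]:
    """Cluster scalar values (e.g. NDVI) into k zones with Lloyd's algorithm.

    Returned labels are ranked by zone centroid: 0 is the lowest-value zone.
    """
    lo, hi = min(values), max(values)
    if k <= 1 or hi == lo:
        return [0] * len(values)
    # Start with centroids evenly spaced between the minimum and maximum value.
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value joins its nearest centroid.
        labels = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
        # Update step: move each centroid to the mean of its members
        # (an empty zone keeps its previous centroid).
        updated = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            updated.append(sum(members) / len(members) if members else centroids[c])
        if updated == centroids:
            break
        centroids = updated
    # Relabel so zone numbers increase with centroid value.
    order = sorted(range(k), key=lambda c: centroids[c])
    rank = {c: i for i, c in enumerate(order)}
    return [rank[lab] for lab in labels]
```

Clustering a single vegetation index keeps the sketch short; in practice additional layers (other bands, soil data) could be stacked as features.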

4.2 Four-band imagery for mapping cotton root rot

Fig. 4 Airborne image and maps for an 11 hm² cotton field infested with cotton root rot near San Angelo, Texas. The green areas in the prescription map were treated with fungicide (Adapted from [70])
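Fig. 4 shows a prescription map but not the rule that produced it. A minimal sketch, assuming infested cells can be flagged by an NDVI threshold and that a buffer of neighbouring cells is added around them, looks like this. The threshold value, the square buffer, and the helper names are all hypothetical and are not taken from [70].

```python
from typing import List

Grid = List[List[float]]
Mask = List[List[bool]]


def classify_infested(ndvi_grid: Grid, threshold: float) -> Mask:
    """Flag a grid cell as root-rot infested when its NDVI is below threshold."""
    return [[value < threshold for value in row] for row in ndvi_grid]


def prescription_with_buffer(infested: Mask, buffer_cells: int = 1) -> Mask:
    """Mark a cell for treatment if an infested cell lies within buffer_cells
    in both row and column direction (a square dilation of the mask)."""
    rows = len(infested)
    cols = len(infested[0]) if infested else 0
    treat = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if not infested[r][c]:
                continue
            # Spread the treatment flag to every neighbour inside the buffer window.
            for dr in range(-buffer_cells, buffer_cells + 1):
                for dc in range(-buffer_cells, buffer_cells + 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        treat[rr][cc] = True
    return treat
```

The buffer trades a slightly larger treated area for tolerance to georeferencing error and to infestation spreading beyond the visibly affected cells.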

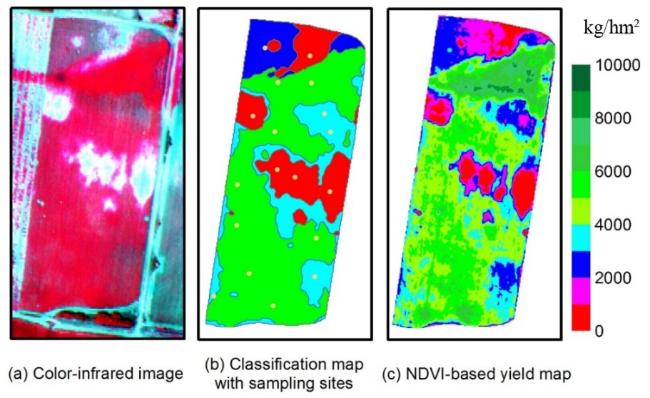

4.3 Hyperspectral imagery for mapping crop yield variability

Fig. 5 RGB color image and yield maps generated from yield monitor data and based on seven significant bands in a 102-band hyperspectral image for a 14 hm² grain sorghum field (Adapted from [69])
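One way to read "based on seven significant bands" is a multiple linear regression of yield monitor values on the reflectance of the selected bands, which is then applied pixel by pixel to map yield. The sketch below fits such a model by ordinary least squares. How [69] selected the bands is not stated here, so band selection is left out, and the names `fit_linear_regression` and `predict` are hypothetical.

```python
from typing import List, Sequence


def fit_linear_regression(bands: Sequence[Sequence[float]],
                          yields: Sequence[float]) -> List[float]:
    """Ordinary least squares: yield ~ b0 + b1*x1 + ... + bp*xp.

    bands[i] holds the reflectance of the selected bands for sample i.
    Solves the normal equations (X^T X) b = X^T y by Gauss-Jordan
    elimination with partial pivoting and returns [b0, b1, ..., bp].
    """
    X = [[1.0] + list(row) for row in bands]  # prepend the intercept column
    n, p = len(X), len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [[sum(X[i][r] * X[i][c] for i in range(n)) for c in range(p)]
         + [sum(X[i][r] * yields[i] for i in range(n))] for r in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("singular normal equations; bands may be collinear")
        A[col], A[pivot] = A[pivot], A[col]
        # Eliminate this column from every other row.
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[r][p] / A[r][r] for r in range(p)]


def predict(coefs: Sequence[float], row: Sequence[float]) -> float:
    """Estimated yield for one pixel's band values."""
    return coefs[0] + sum(b * x for b, x in zip(coefs[1:], row))
```

With 102 highly correlated hyperspectral bands the normal equations become ill-conditioned, which is one reason to restrict the model to a small set of significant bands.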

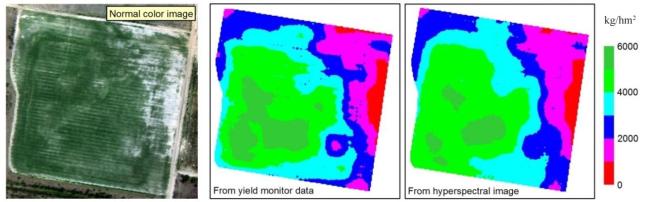

4.4 Crop monitoring with consumer-grade cameras

Fig. 6 Flight lines (left) for taking images using a pair of RGB and NIR consumer-grade cameras and a mosaicked RGB image (right) for a cropping area near College Station, Texas.
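Flight lines like those in Fig. 6 are usually spaced from the camera's ground swath and the desired sidelap between adjacent strips. For a nadir-pointing camera at height H with cross-track field of view θ, the swath width is W = 2H·tan(θ/2) and the line spacing is W(1 − sidelap). The sketch below applies this geometry; the altitude, field of view, and sidelap in the usage are placeholder values, not the settings used for Fig. 6, and the function names are hypothetical.

```python
import math


def swath_width(altitude_m: float, fov_deg: float) -> float:
    """Ground swath width (m) of a nadir camera: 2 * H * tan(FOV / 2)."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def flight_plan(area_width_m: float, altitude_m: float,
                fov_deg: float, sidelap: float):
    """Return (line_spacing_m, number_of_lines) needed to cover area_width_m.

    n lines cover W + (n - 1) * spacing metres, so the smallest n with
    W + (n - 1) * spacing >= area_width_m is chosen.
    """
    if not 0.0 <= sidelap < 1.0:
        raise ValueError("sidelap must be in [0, 1)")
    w = swath_width(altitude_m, fov_deg)
    spacing = w * (1.0 - sidelap)
    if area_width_m <= w:
        return spacing, 1
    return spacing, math.ceil((area_width_m - w) / spacing) + 1
```

For example, `flight_plan(4500, 1000, 90, 0.5)` gives a spacing of about 1000 m and 4 lines. Forward overlap along each line is set separately through the camera trigger interval.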

4.5 Multispectral imagery for site-specific aerial herbicide application

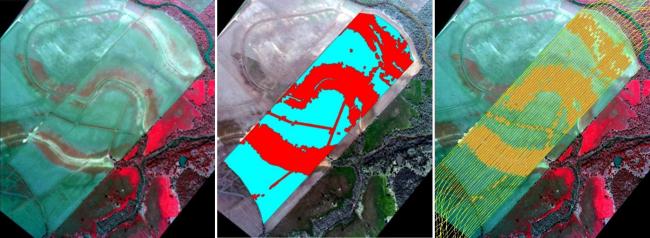

Fig. 7 Images for a 124 hm² fallow field infested with henbit weed near College Station, Texas: (a) airborne color-infrared image; (b) prescription map; (c) as-applied map (Adapted from [68])
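Comparing panels (b) and (c) of Fig. 7 amounts to cross-tabulating the prescription map against the as-applied map. A minimal sketch, assuming both maps have been rasterized to the same grid of boolean spray/no-spray cells with a known cell area, is shown below. The grid representation and the name `compare_maps` are hypothetical.

```python
from typing import Dict, List

Mask = List[List[bool]]


def compare_maps(prescribed: Mask, applied: Mask, cell_area_m2: float) -> Dict[str, float]:
    """Cross-tabulate a prescription map against an as-applied map cell by cell.

    Areas are returned in hm^2 (1 hm^2 = 10,000 m^2): correctly treated,
    missed (prescribed but not sprayed), and over-applied (sprayed but not
    prescribed), plus the fraction of cells on which the two maps agree.
    """
    counts = {"treated": 0, "missed": 0, "over_applied": 0, "agree": 0, "total": 0}
    for p_row, a_row in zip(prescribed, applied):
        for p, a in zip(p_row, a_row):
            counts["total"] += 1
            if p == a:
                counts["agree"] += 1
            if p and a:
                counts["treated"] += 1
            elif p:
                counts["missed"] += 1
            elif a:
                counts["over_applied"] += 1
    to_hm2 = cell_area_m2 / 10_000.0
    return {
        "treated_hm2": counts["treated"] * to_hm2,
        "missed_hm2": counts["missed"] * to_hm2,
        "over_applied_hm2": counts["over_applied"] * to_hm2,
        "agreement": counts["agree"] / counts["total"] if counts["total"] else 0.0,
    }
```

Reporting missed and over-applied areas separately distinguishes weeds left untreated from herbicide spent outside the prescribed zones.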