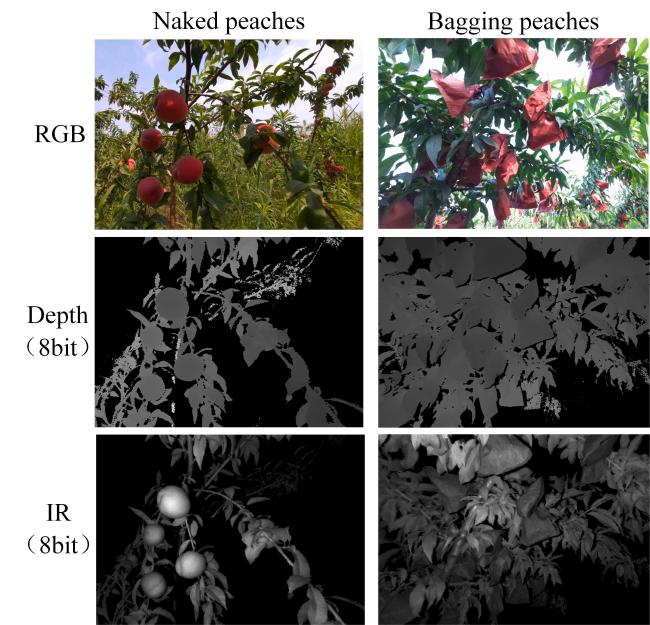

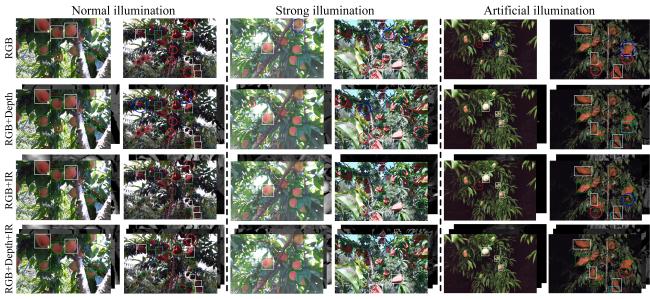

The first task in automated peach harvesting is accurate peach detection. In-field fruit detection has been widely applied to a variety of fruits. However, most images used by traditional methods were acquired under controlled illumination, which makes these methods vulnerable in complex orchard environments

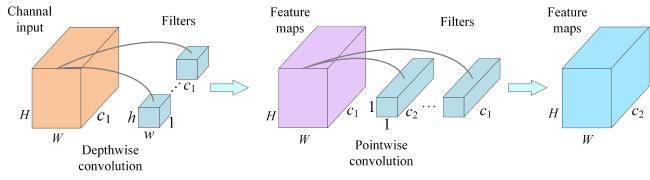

[7]. Additionally, other factors, such as variation in the appearance, morphology, and size of fruits, can also critically affect detection accuracy. Compared with traditional methods, deep learning adapts well to variation within a working scene and has become one of the most promising techniques for learning image features. As a result, deep learning algorithms have been widely used in fruit detection for agricultural robots in unstructured environments

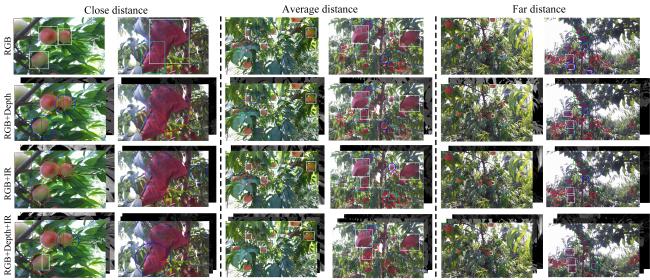

[8-10]. However, in real orchards, one of the greatest challenges in fruit detection is the complex orchard environment, such as changing and complex backgrounds

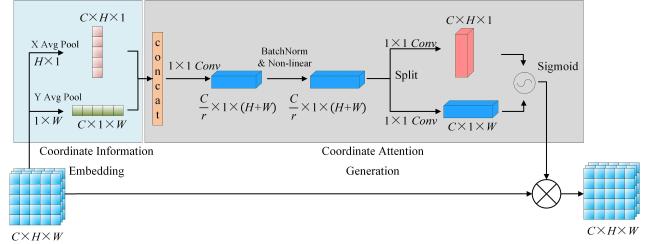

[11]. Meanwhile, the varying scales of fruit targets also make detection substantially harder, especially when the fruits are bagged in orchards. With the rapid development of deep learning, attention mechanisms, which are inspired by human vision, have therefore been applied to enhance a model's perception in complex environments. In recent years, many attention strategies have been adopted for various fruit detection tasks. Li et al. [12] introduced a deep learning object detection algorithm based on an improved YOLOv4_tiny that combined an attention mechanism with multi-scale prediction to improve the recognition of occluded and small-target green peppers. Jiang et al. [13] detected young apples efficiently by adding a non-local attention module and a convolutional block attention module (CBAM) to a YOLOv4 model. Huang et al. [14] extended the object detection algorithm by adding CBAM to improve citrus detection performance. Overall, these studies demonstrated that attention mechanisms can enhance a model's detection performance and help it adapt to natural environments with complex backgrounds.