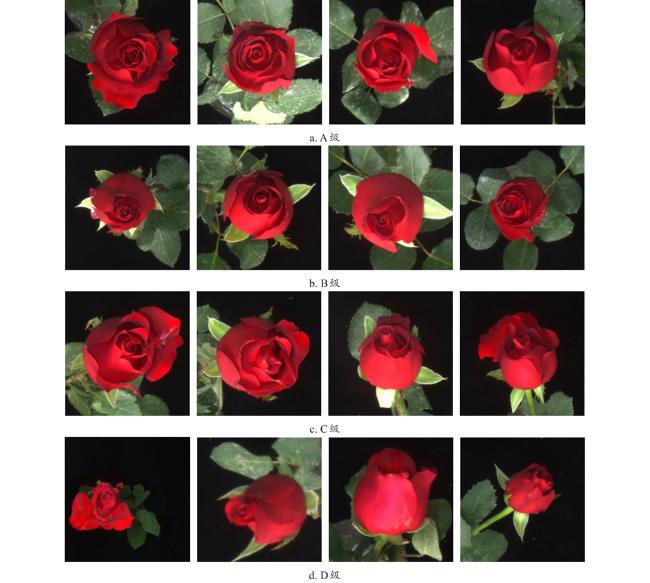

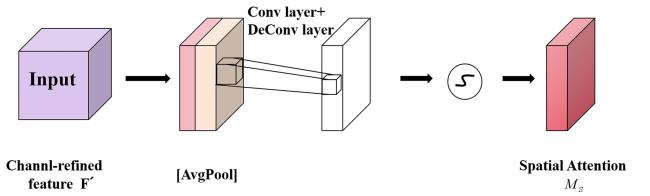

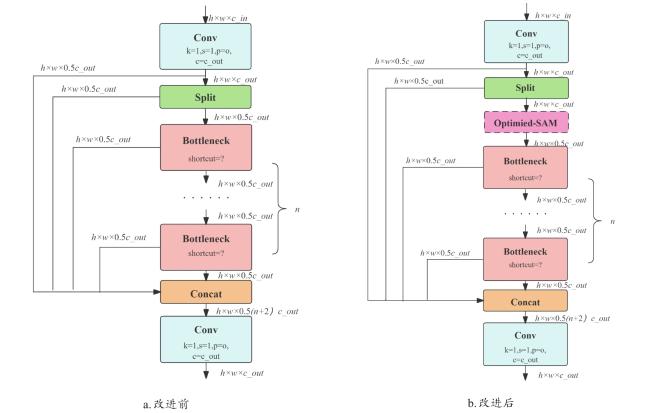

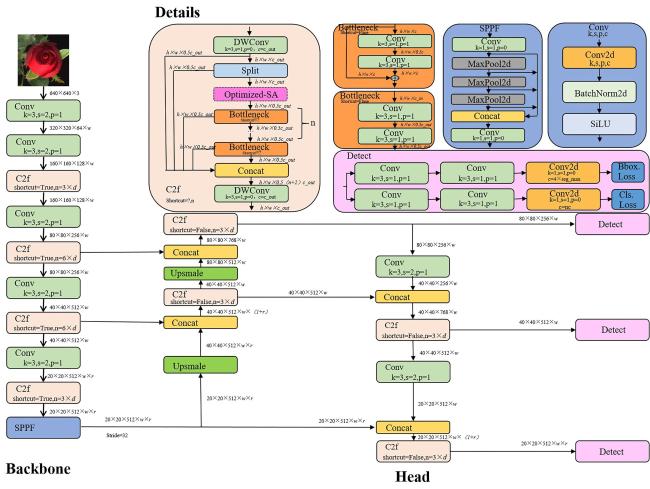

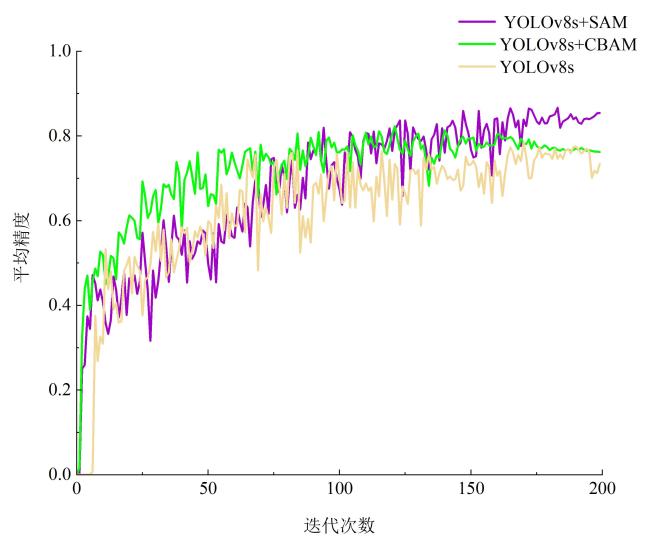

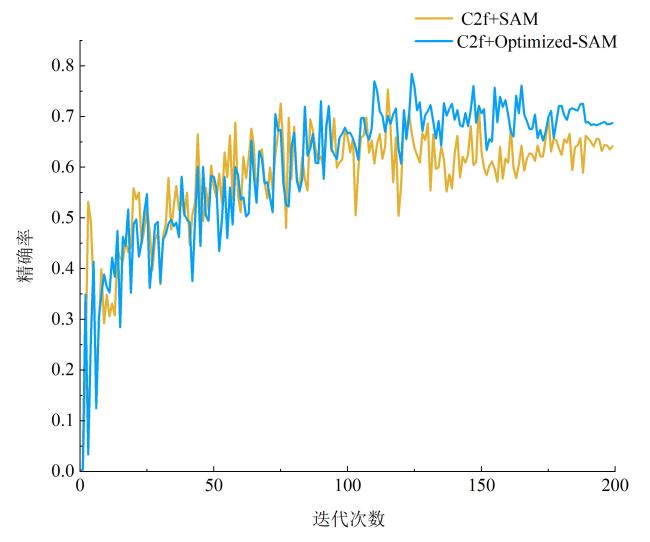

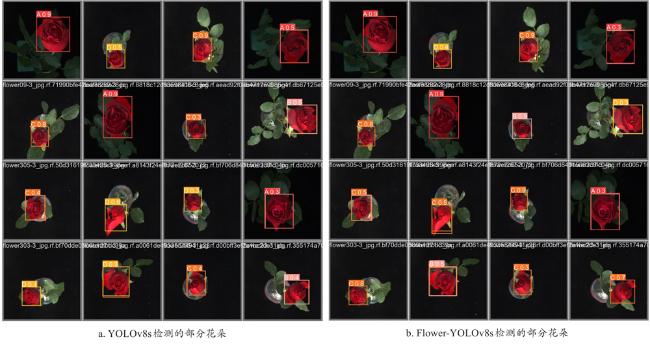

[Objective] The fresh cut rose industry has grown steadily in recent years. Because grading of fresh cut roses currently relies on simple manual inspection, which is inefficient and inaccurate, a new model named Flower-YOLOv8s was proposed for grading detection of fresh cut roses. [Methods] The flower head of a single rose against a uniform background was selected as the primary detection target. Fresh cut roses were categorized into four grades, A, B, C, and D, based on factors such as color, size, and freshness. A new dataset containing 778 images was constructed specifically for grading and detection of fresh cut roses and served as the foundation for the subsequent experiments and analysis. To improve the performance of the YOLOv8s model, two attention modules, the convolutional block attention module (CBAM) and the spatial attention module (SAM), were introduced separately for comparison experiments. Each module was integrated into the backbone network of YOLOv8s to strengthen its focus on salient features and suppress irrelevant information. The SAM module was then selected and optimized by reducing the number of convolution kernels, incorporating a depthwise separable convolution module, and reducing the number of input channels, which improved the module's efficiency and reduced the overall computational complexity of the model. Finally, the convolution layer (Conv) in the C2f module was replaced by depthwise separable convolution (DWConv), and the Optimized-SAM was introduced into the C2f structure, yielding the Flower-YOLOv8s model. Precision, recall and F1 score were used as evaluation indicators. 
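The parameter saving from replacing a standard convolution with a depthwise separable one can be illustrated with a simple weight count. The sketch below is only an illustration under stated assumptions: the layer sizes (128 input channels, 128 output channels, 3 x 3 kernel) are hypothetical and are not taken from the Flower-YOLOv8s architecture, bias terms are ignored, and the depthwise separable layer is assumed to be a depthwise convolution followed by a 1 x 1 pointwise convolution.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weight count of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weight count of a depthwise k x k conv plus a 1 x 1 pointwise conv."""
    depthwise = c_in * k * k   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer sizes, chosen only for illustration.
    c_in, c_out, k = 128, 128, 3
    standard = conv_params(c_in, c_out, k)
    separable = depthwise_separable_params(c_in, c_out, k)
    print(f"standard conv weights:       {standard}")
    print(f"depthwise separable weights: {separable}")
    print(f"ratio (separable/standard):  {separable / standard:.3f}")
```

Under these assumptions the ratio is 1/c_out + 1/k², so the saving grows with the number of output channels and the kernel size.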
[Results and Discussions] Ablation results showed that the proposed Flower-YOLOv8s model, namely YOLOv8s+DWConv+Optimized-SAM, achieved a recall rate of 95.4%, which was 3.8% higher than that of YOLOv8s with DWConv alone, while its average accuracy was 0.2% higher, confirming the effectiveness of adding Optimized-SAM. Compared with the baseline model YOLOv8s, Flower-YOLOv8s increased accuracy by 2.1%, reaching 97.4%, and increased mAP by 0.7%. The number of parameters was reduced by 2.26 M relative to the baseline, and the inference time was reduced from 15.6 to 5.7 ms. Flower-YOLOv8s was therefore superior to the baseline model in terms of accuracy, average accuracy, number of parameters, detection time and model size. To verify its superiority, Flower-YOLOv8s was also compared, under the same conditions and on the same dataset, with the target detection algorithms Fast-RCNN and Faster-RCNN and with the one-stage target detection models SSD, YOLOv3, YOLOv5s and YOLOv8s. The average precision of Flower-YOLOv8s was 2.6%, 19.4%, 6.5%, 1.7%, 1.9% and 0.7% higher than that of Fast-RCNN, Faster-RCNN, SSD, YOLOv3, YOLOv5s and YOLOv8s, respectively. Compared with YOLOv8s, which had a higher recall rate, Flower-YOLOv8s reduced model size, inference time and parameter count by 4.5 MB, 9.9 ms and 2.26 M, respectively. These gains in accuracy were achieved while reducing model parameters and computational complexity. [Conclusions] The Flower-YOLOv8s model demonstrated superior detection accuracy together with fewer model parameters and lower computational complexity. 
This lightweight yet powerful model is highly suitable for real-time applications, making it a promising candidate for flower grading and detection tasks in the agricultural and horticultural industries.
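
The three evaluation indicators named in the Methods (precision, recall and F1 score) follow the standard definitions based on true positives, false positives and false negatives. The sketch below shows those definitions; the function name and the detection counts are hypothetical and chosen only for illustration, and they are not results from this study.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Compute precision, recall and F1 from detection counts.

    tp: correct detections, fp: spurious detections, fn: missed targets.
    Returns 0.0 for any metric whose denominator is zero.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical counts for a single grade, for illustration only.
    p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
    print(f"precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```

Because F1 is the harmonic mean of precision and recall, it is pulled toward the lower of the two, which is why a gain in recall can raise F1 even when precision changes little.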