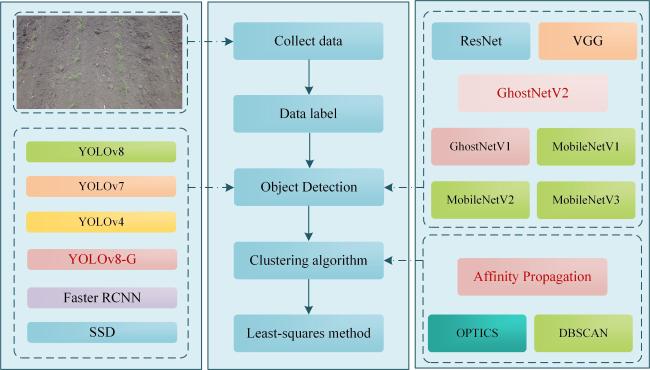

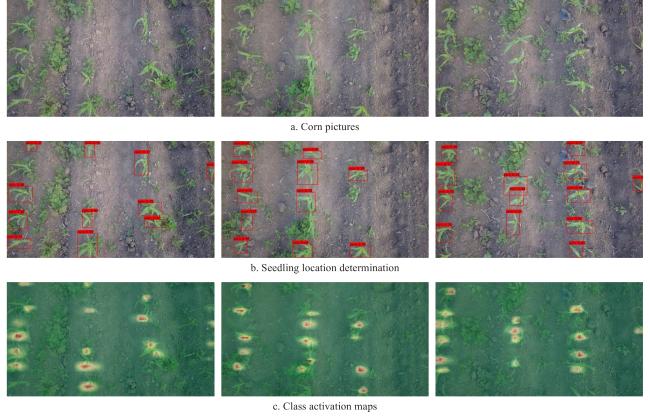

The detection of crop lines is mainly divided into two parts. First, the feature points of crops are extracted, and then the crop line is determined based on the feature points of crops. A variety of algorithms have attempted the task of crop line extraction

[4-6]. Although the traditional image processing method is simple and quick, the feature points extracted under strong light are easily disturbed, and distinguishing between crops and weeds is difficult. In recent years, the object detection algorithm has also been applied in crop row detection. Ponnambalam et al.

[7] used convolutional neural networks to extract crop row feature points and introduced an adaptive multi-ROI method to address the uneven contours of crop rows in hilly terrains, followed by linear regression for crop line detection. This highlights the effectiveness of CNN-based crop row detection and adaptive trajectory fitting in precision agriculture. Liu et al.

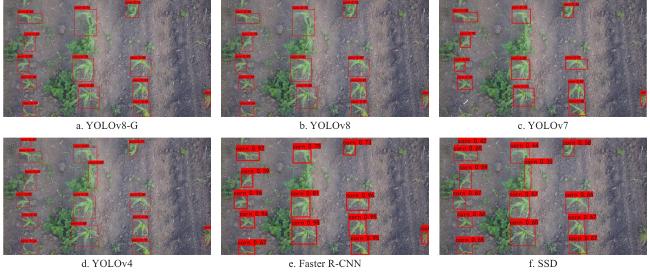

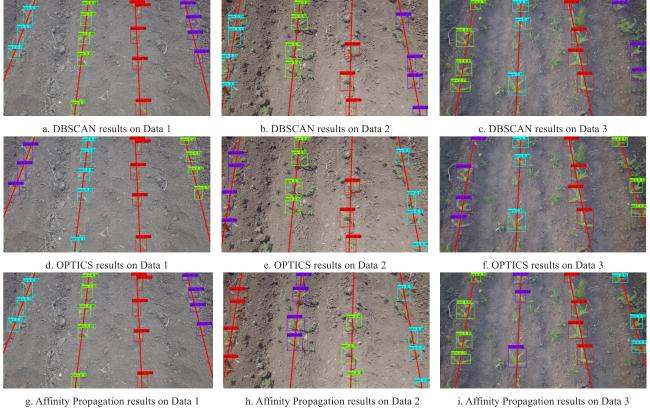

[8] used the single shot multibox detector (SSD) target detection algorithm to obtain crop feature points, and combined Mask R-CNN with the density-based spatial clustering of applications with noise (DBSCAN) clustering algorithm to accurately segment overlapping seedling leaves under heavy metal stress, and finally applied the Least Squares method to detect crop lines. De Silva et al.

[9] presented a deep convolutional encoder-decoder network for predicting crop line masks using RGB images. The algorithm outperformed baseline methods in both crop row detection and visual servoing-based navigation in realistic field scenarios. De Silva et al.

[10] proposed a deep learning-based semantic segmentation technique for identifying and extracting the position and shape of crop lines. The deep convolutional encoder-decoder network effectively predicted crop row masks from RGB images and demonstrated robustness against shadows and varying growth stages of crops. Although many mainstream target detection methods demonstrate high accuracy and can effectively distinguish between crops and weeds in complex backgrounds, they face significant limitations when directly deployed on agricultural machinery due to their large model size and high computational requirements. This constraint makes it challenging to implement these methods in embedded systems with limited processing power, which is typical for most agricultural machinery. Additionally, these models often require substantial energy consumption, which can be impractical in field operations where power efficiency is essential.
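The two-stage pipeline described above (feature point extraction followed by line fitting) can be illustrated with a minimal sketch of the second stage only. It assumes the feature points are already available as pixel coordinates and fits a single crop line by ordinary least squares, the fitting approach mentioned for [7] and [8]. The function name `fit_crop_line`, the sample points, and the choice to regress x on y (because crop rows usually appear close to vertical in a forward-facing image) are illustrative assumptions, not details taken from the cited works.

```python
import numpy as np


def fit_crop_line(points):
    """Fit the line x = a * y + b to (x, y) feature points by least squares.

    Regressing x on y is an assumption made here: it avoids near-infinite
    slopes when the crop row is close to vertical in the image.
    """
    pts = np.asarray(points, dtype=float)
    if pts.ndim != 2 or pts.shape[1] != 2 or len(pts) < 2:
        raise ValueError("need at least two (x, y) feature points")
    x, y = pts[:, 0], pts[:, 1]
    # polyfit with deg=1 returns [slope, intercept] of the least squares line
    a, b = np.polyfit(y, x, deg=1)
    return float(a), float(b)


# Hypothetical feature points (pixel coordinates) along one crop row
points = [(100, 0), (102, 50), (104, 100), (106, 150)]
slope, intercept = fit_crop_line(points)
print(f"x = {slope:.3f} * y + {intercept:.1f}")
```

In practice the feature points would first be grouped by crop row (for example with a clustering step such as DBSCAN) and one line would be fitted per group. A fit this small is cheap enough for embedded hardware, so the computational cost discussed above comes mainly from the feature extraction network.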