1 Introduction

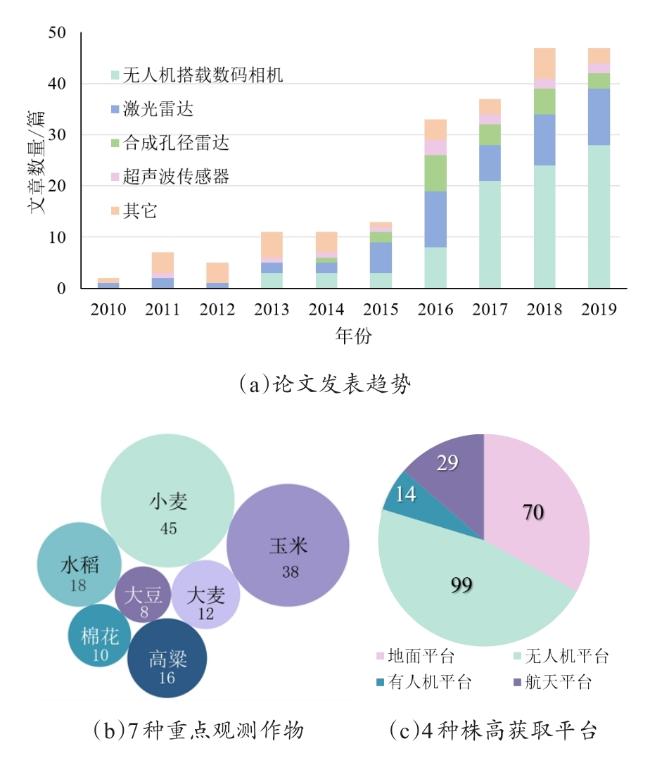

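2 Global Research Landscape of Remote Sensing-Based Height Measurement

3 Research Progress in Near-Ground Remote Sensing for Height Measurement

3.1 Active Remote Sensing for Height Measurement and Its Characteristics

3.2 Passive Remote Sensing for Height Measurement and Its Characteristics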

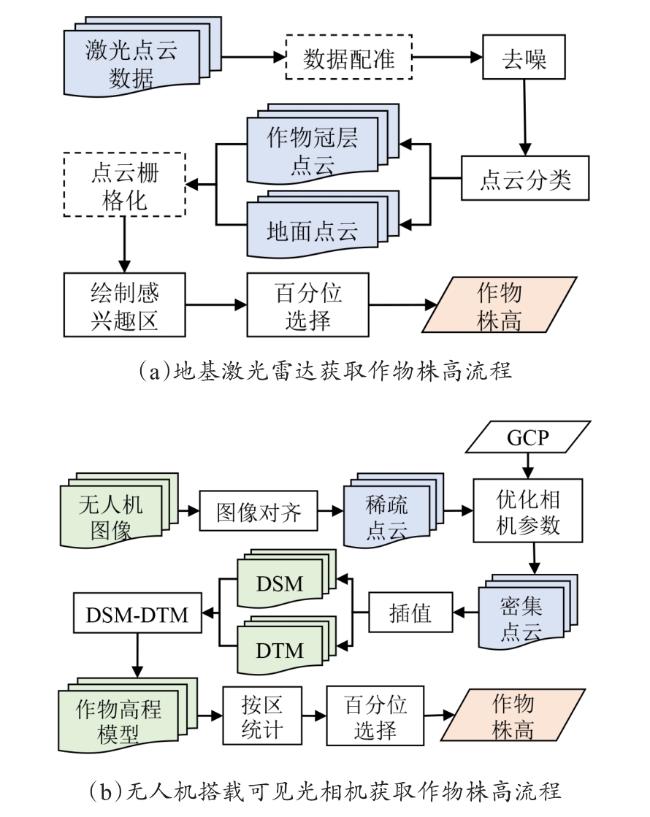

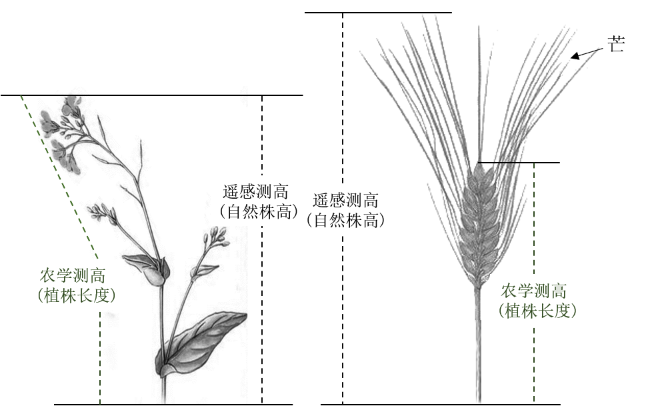

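3.3 Workflow and Key Techniques of Near-Ground Remote Sensing Height Measurement

3.3.1 Height Measurement with Ground-Based LiDAR

3.3.2 Height Measurement with UAV-Mounted Visible-Light Cameras

4 Agricultural Applications of Near-Ground Remote Sensing Height Measurement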

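Table 1 Applications of near-field remote sensing for obtaining the plant height of field crops

| Application | Sensor | Platform / flight altitude | Crop | Model | RMSE | R² |
|---|---|---|---|---|---|---|
| Biomass estimation | LiDAR [73] | Ground-based fixed platform | Wheat | Power-function regression model | 1.76 t/ha | 0.82 |
| | Ultrasonic sensor [74] | Ground-based fixed platform | Lettuce | Exponential regression model | — | 0.80 |
| | Visible-light camera [75] | UAV / 50 m | Wheat | Partial least squares regression model | 0.96 t/ha | 0.74 |
| | Visible-light camera [76] | UAV / 25 m | Rice | Random forest | 2.10 t/ha | 0.90 |
| | Visible-light camera [77] | UAV / 44 m | Onion | Crop volume model | 1.53 t/ha | 0.95 |
| Lodging monitoring | LiDAR [78] | UAV / 15 m | Maize | Lodging severity quantified from changes in plant height; plant-height accuracy R²=0.964, RMSE=0.127 m | | |
| | Visible-light camera [79] | UAV / 20–50 m | Maize | Lodging rate quantified by thresholding; agreement with ground measurements R²=0.50, RMSE=0.09 | | |
| | Visible-light camera [80] | UAV / 35 m | Barley | Lodging rate quantified by thresholding; best agreement with ground measurements R²=0.96, RMSE=0.08 | | |
| Yield prediction | Visible-light camera [81] | UAV / 50 m | Maize | Multiple regression model | 0.13 t/ha | 0.74 |
| | Visible-light camera [82] | UAV / 50 m | Sugarcane | Crop model | 1.09 t/ha | 0.44 |
| | Visible-light camera [83] | UAV / 50 m | Cotton | Multiple regression model | 0.16 t/ha | 0.94 |
| | Visible-light camera [84] | UAV / 30 m | Soybean | Partial least squares regression model | 0.42 t/ha | 0.81 |
| | Hyperspectral camera [63] | UAV / 50 m | Wheat | Partial least squares regression model | 0.65 t/ha | 0.77 |
| Breeding support | Visible-light camera [85] | UAV / 30 m | Wheat | Genome-wide and QTL marker analysis of the plant-height trait; correlation between predicted genomic values and observed values 0.47–0.53 | | |
| | Visible-light camera [86] | UAV / 40–60 m | Maize | Genome-wide association study of 7 plant-height-related traits identified 68 QTLs, 35% of which coincide with previously reported QTLs controlling plant height | | |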
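Table 1, Table 3 and the studies behind them report accuracy as RMSE, R² and, in some cases, relative error (RE) between remote-sensing estimates and ground measurements. The sketch below shows how these metrics are commonly computed for paired samples. R² is taken here as the coefficient of determination 1 − SS_res/SS_tot. Some studies instead report the squared Pearson correlation of a fitted regression line, which can differ. RE is assumed to be the mean absolute relative error in percent. The function names and sample heights are hypothetical.

```python
import math
from typing import Sequence


def _check(measured: Sequence[float], estimated: Sequence[float]) -> None:
    # Each ground measurement must be paired with exactly one estimate.
    if not measured or len(measured) != len(estimated):
        raise ValueError("measured and estimated must be non-empty and equal in length")


def rmse(measured: Sequence[float], estimated: Sequence[float]) -> float:
    """Root-mean-square error, in the units of the inputs (e.g. m or t/ha)."""
    _check(measured, estimated)
    sq = sum((e - m) ** 2 for m, e in zip(measured, estimated))
    return math.sqrt(sq / len(measured))


def r_squared(measured: Sequence[float], estimated: Sequence[float]) -> float:
    """Coefficient of determination 1 - SS_res / SS_tot, with measured values as reference."""
    _check(measured, estimated)
    mean_m = sum(measured) / len(measured)
    ss_res = sum((m - e) ** 2 for m, e in zip(measured, estimated))
    ss_tot = sum((m - mean_m) ** 2 for m in measured)
    if ss_tot == 0:
        raise ValueError("R² is undefined when all measured values are equal")
    return 1.0 - ss_res / ss_tot


def relative_error(measured: Sequence[float], estimated: Sequence[float]) -> float:
    """Mean absolute relative error in percent (assumed definition of RE)."""
    _check(measured, estimated)
    return 100.0 * sum(abs(e - m) / m for m, e in zip(measured, estimated)) / len(measured)


if __name__ == "__main__":
    # Hypothetical plant heights in metres: ground truth vs. remote-sensing estimate.
    measured = [1.0, 1.5, 2.0, 2.5]
    estimated = [1.1, 1.4, 2.1, 2.4]
    print(f"RMSE = {rmse(measured, estimated):.3f} m")        # RMSE = 0.100 m
    print(f"R²   = {r_squared(measured, estimated):.3f}")     # R²   = 0.968
    print(f"RE   = {relative_error(measured, estimated):.2f}%")  # RE   = 6.42%
```

Because RMSE keeps the units of the target variable, the biomass and yield RMSE values in Table 1 (t/ha) cannot be compared directly with plant-height RMSE values (m).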

5 Current Problems and Outlook

5.1 Balancing Height-Measurement Accuracy and Cost

Table 2 Summary of the accuracy and cost of crop height estimation under different levels of data completeness

| Category | Conditions | Accuracy | Cost | ||||||
|---|---|---|---|---|---|---|---|---|---|
| DTM | GCP | Ground data | Canopy density | R² | RMSE | Labor cost | Time cost | Operation cost | |
| 1 | √ | √ | Sparse/dense | ★★★★★ | ★★★★ | ★★☆ | ★☆ | ★★ | |
| √ | Sparse/dense | ★★★★★ | ★★★★★ | ☆ | ☆ | ☆ | |||
| 2 | √ | Sparse | ★★★★★ | ★★★★ | ★★★☆ | ★★★★ | ★★★ | ||
| Dense | ★☆ | / | ★★★☆ | ★★★★ | ★★★ | ||||
| √ | Sparse | ★★★★★ | ★★★★★ | ★☆ | ★★★ | ★☆ | |||
| √ | Dense | ★☆ | ★★ | ★☆ | ★★★ | ★☆ | |||
| 3 | √ | Sparse | ★★ | / | ★★★★ | ★★☆ | ★★★★ | ||
| Dense | ★★ | / | ★ | ★★ | ★★ | ||||
| √ | Sparse | ★★ | ★★★ | ★★★★ | ★★☆ | ★★★★ | |||
| √ | Dense | ★★ | ★★★ | ★ | ★★ | ★★ | |||
| 4 | Sparse | ★ | / | ★★★★★ | ★★★★★ | ★★★★★ | |||
| Dense | ★ | / | ★★★★★ | ★★★★★ | ★★★★★ | ||||
| √ | Sparse | ★ | ★☆ | ★★★ | ★★★★ | ★★★☆ | |||
| √ | Dense | ★ | ★☆ | ★★★ | ★★★★ | ★★★☆ | |||
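Table 2 grades height estimates by whether a DTM, GCPs and ground data are available. The sketch below assumes the widely used crop-surface-model approach. Crop height is taken as the difference between a digital surface model (DSM) of the canopy and a DTM of the bare ground, on co-registered grids of equal shape. Several details are illustrative assumptions, not the procedure of any study summarized in the table: the function names, clipping negative differences to zero, and using the 95th percentile as the plot-level height. The code does not correct any misalignment between the two grids, which is the error GCPs are meant to limit.

```python
import math
from typing import List, Sequence

Grid = List[List[float]]


def percentile(values: Sequence[float], q: float) -> float:
    """Percentile with linear interpolation between order statistics
    (the same convention as NumPy's default 'linear' method)."""
    if not values or not 0.0 <= q <= 100.0:
        raise ValueError("need at least one value and 0 <= q <= 100")
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def canopy_height_model(dsm: Grid, dtm: Grid) -> Grid:
    """Per-cell crop height = DSM - DTM on co-registered grids of equal shape.
    Negative differences (noise or misregistration) are clipped to 0."""
    if len(dsm) != len(dtm) or any(len(a) != len(b) for a, b in zip(dsm, dtm)):
        raise ValueError("DSM and DTM must have the same shape")
    return [[max(s - t, 0.0) for s, t in zip(row_s, row_t)]
            for row_s, row_t in zip(dsm, dtm)]


def plot_height(chm: Grid, q: float = 95.0) -> float:
    """Summarise one plot's height-model cells by a high percentile (95th assumed)."""
    return percentile([v for row in chm for v in row], q)


if __name__ == "__main__":
    # Hypothetical 2 x 2 elevations (m): canopy surface from UAV imagery,
    # bare ground from an assumed pre-emergence survey.
    dsm = [[101.2, 101.5], [101.8, 100.9]]
    dtm = [[100.0, 100.1], [100.0, 100.2]]
    chm = canopy_height_model(dsm, dtm)
    print(f"plot height ≈ {plot_height(chm):.2f} m")  # plot height ≈ 1.74 m
```

A high percentile is used instead of the maximum so that a single noisy cell does not set the plot height. The percentile level itself is a tuning choice.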

5.2 Fine-Scale Height Measurement on UAV Remote Sensing Platforms

Table 3 Studies on height measurement using UAV-mounted LiDAR systems

| Sensor | Target | FOV (°) | Ranging accuracy (cm) | Weight (kg) | Flight speed (m·s⁻¹) | Flight altitude (m) | Point density (pts·m⁻²) | Accuracy |
|---|---|---|---|---|---|---|---|---|
| Livox MID 40 | Forest trees [112] | 38.40 | 2.00 | 0.76 | 4.00 | 100.00 | 464.5 | R²=0.96, RMSE=0.59 m |
| RIEGL VUX-1UAV | Maize [78] | 330 | 0.50 | 3.50 | 3.00 | 15.00 | 112.0–570.0 | R²=0.96, RMSE=0.13 m |
| RIEGL VUX-1UAV | Wheat [26] | 330 | 0.50 | 3.50 | 5.85 | 41.84 | 997.0 | R²=0.78, RMSE=0.03 m |
| RIEGL VUX-1UAV | Potato [26] | 330 | 0.50 | 3.50 | 5.85 | 41.84 | 833.0 | R²=0.50, RMSE=0.12 m |
| RIEGL VUX-1UAV | Sugar beet [26] | 330 | 0.50 | 3.50 | 5.85 | 41.84 | 933.0 | R²=0.70, RMSE=0.07 m |
| RIEGL VUX-1UAV | Maize [113] | 330 | 0.50 | 3.50 | — | 150.00 | 420.0 | R²=0.65, RMSE=0.24 m |
| RIEGL VUX-1UAV | Soybean [113] | 330 | 0.50 | 3.50 | — | 150.00 | 420.0 | R²=0.40, RMSE=0.09 m |
| Velodyne VLP-16 | Cotton [4] | 360 | 3.00 | 0.83 | 2.00 | 9.00 | 1682.0 | RE=12.73%, RMSE=0.03 m |
| Velodyne VLP-16 | Soybean [114] | 360 | 3.00 | 0.83 | 0.50 | 9.00 | 1600.0 | RE=5.14% |
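Table 3 lists point density alongside height accuracy for UAV LiDAR. The sketch below roughly illustrates how a plot-level height can be read from a LiDAR point cloud. It assumes that the lowest returns in a plot come from the ground and the highest from the canopy top, and it takes the difference between two elevation percentiles. The 1st and 99th percentile levels and the function names are hypothetical. Practical pipelines usually classify ground points and normalize elevations before this step. The same sketch computes point density as returns per square metre of plot footprint.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def percentile(values: Sequence[float], q: float) -> float:
    """Linear-interpolation percentile of a non-empty sequence."""
    if not values or not 0.0 <= q <= 100.0:
        raise ValueError("need at least one value and 0 <= q <= 100")
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def plot_height_from_points(points: Sequence[Point],
                            ground_q: float = 1.0, top_q: float = 99.0) -> float:
    """Hypothetical estimator: canopy top (high z percentile) minus ground level
    (low z percentile). Percentiles reduce the influence of isolated noise returns."""
    zs = [z for _, _, z in points]
    return percentile(zs, top_q) - percentile(zs, ground_q)


def point_density(points: Sequence[Point], area_m2: float) -> float:
    """Returns per square metre over the plot footprint (pts·m⁻², as in Table 3)."""
    if area_m2 <= 0:
        raise ValueError("area must be positive")
    return len(points) / area_m2


if __name__ == "__main__":
    # Hypothetical returns from a 0.5 m² plot: three near the ground, three on the canopy.
    pts = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.1, 0.05),
           (0.3, 0.2, 0.9), (0.4, 0.3, 1.0), (0.5, 0.4, 1.1)]
    print(f"height ≈ {plot_height_from_points(pts):.3f} m")     # height ≈ 1.095 m
    print(f"density = {point_density(pts, 0.5):.1f} pts/m²")   # density = 12.0 pts/m²
```

In a dense canopy few returns may reach the ground. A low percentile then overestimates ground level and underestimates height, which matches the accuracy loss Table 2 associates with dense canopies.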