0 Introduction

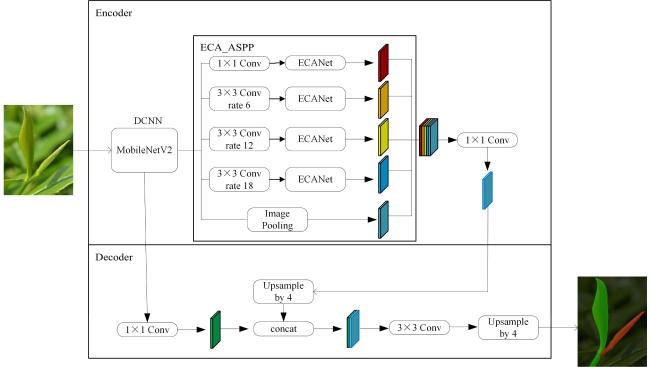

1 Experimental Data and Network Architecture

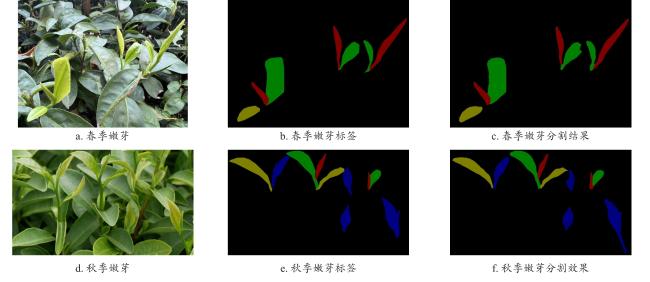

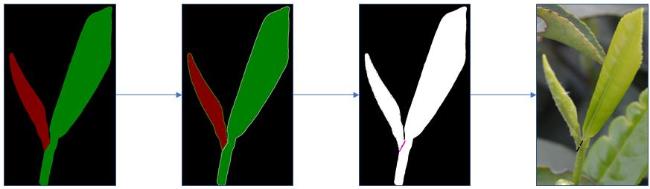

1.1 Dataset Construction

1.2 MobileNetV2 Network

Table 1 Structure of MobileNetV2

| Input feature size | Operation | Bottleneck expansion factor | Channels | Bottleneck repeats | Stride |
|---|---|---|---|---|---|
| 224²×3 | conv2d | ‒ | 32 | 1 | 2 |
| 112²×32 | bottleneck | 1 | 16 | 1 | 1 |
| 112²×16 | bottleneck | 6 | 24 | 2 | 2 |
| 56²×24 | bottleneck | 6 | 32 | 3 | 2 |
| 28²×32 | bottleneck | 6 | 64 | 4 | 2 |
| 14²×64 | bottleneck | 6 | 96 | 3 | 1 |
| 14²×96 | bottleneck | 6 | 160 | 3 | 2 |
| 7²×160 | bottleneck | 6 | 320 | 1 | 1 |
| 7²×320 | conv2d 1×1 | ‒ | 1280 | 1 | 1 |
| 7²×1280 | avgpool 7×7 | ‒ | ‒ | 1 | ‒ |
| 1×1×1280 | conv2d 1×1 | ‒ | m | ‒ | ‒ |
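The input sizes in Table 1 follow from the strides alone. The sketch below traces them in plain Python as a consistency check. It assumes that only the first block of each stage applies the listed stride and that padding keeps the spatial size at `size // stride`; `LAYERS` and `trace_shapes` are illustrative names, not part of the paper's code.

```python
# Rows copied from Table 1: (operation, expansion factor, channels, repeats, stride).
LAYERS = [
    ("conv2d", None, 32, 1, 2),
    ("bottleneck", 1, 16, 1, 1),
    ("bottleneck", 6, 24, 2, 2),
    ("bottleneck", 6, 32, 3, 2),
    ("bottleneck", 6, 64, 4, 2),
    ("bottleneck", 6, 96, 3, 1),
    ("bottleneck", 6, 160, 3, 2),
    ("bottleneck", 6, 320, 1, 1),
    ("conv2d 1x1", None, 1280, 1, 1),
]


def trace_shapes(size=224, channels=3):
    """Return (operation, input size, input channels) for each stage of Table 1."""
    rows = []
    for op, _expansion, out_channels, _repeats, stride in LAYERS:
        rows.append((op, size, channels))
        # Assumption: the stride is applied once per stage (in its first block).
        size = size // stride
        channels = out_channels
    # The global average pooling layer sees the output of the last convolution.
    rows.append(("avgpool 7x7", size, channels))
    return rows


for op, size, channels in trace_shapes():
    print(f"{size}x{size}x{channels:<5} {op}")

# Total number of bottleneck blocks across all stages.
num_bottlenecks = sum(n for op, _, _, n, _ in LAYERS if op == "bottleneck")
```

Under these assumptions the traced sizes reproduce the first column of Table 1, and the repeat counts sum to 17 bottleneck blocks.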

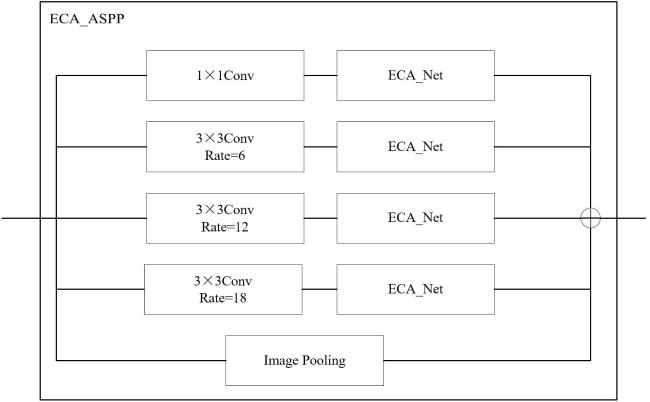

1.3 ECA_ASPP Module

1.4 Improved DeepLabV3+ Network

2 Experimental Results and Analysis

2.1 Experimental Environment and Evaluation Metrics

Table 2 Experimental environment configuration

| Item | Configuration |
|---|---|
| Operating system | Windows 11 |
| Programming language | Python 3.11 |
| Deep learning framework | PyTorch 1.7.1 |
| CPU | Intel® i5-13400F @ 2.5 GHz |
| GPU | NVIDIA RTX 3060 (12 GB) |
| Memory | DDR4, 32 GB, 4000 MHz |
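The results below are reported as MPA and MIoU. This section does not give their formulas, so the following is a minimal sketch using the common definitions for semantic segmentation: per-class pixel accuracy is TP / (TP + FN), per-class IoU is TP / (TP + FP + FN), and both are averaged over classes. The function name and the confusion-matrix layout are assumptions made for illustration.

```python
def segmentation_metrics(conf):
    """Compute (MPA, MIoU) from a square confusion matrix.

    conf[i][j] is the number of pixels whose true class is i and whose
    predicted class is j (an assumed layout, not taken from the paper).
    """
    n = len(conf)
    per_class_acc = []
    per_class_iou = []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                       # true class c, predicted otherwise
        fp = sum(conf[r][c] for r in range(n)) - tp  # predicted c, true class otherwise
        # Classes with no pixels are scored 0.0 here; excluding them is another option.
        per_class_acc.append(tp / (tp + fn) if (tp + fn) else 0.0)
        per_class_iou.append(tp / (tp + fp + fn) if (tp + fp + fn) else 0.0)
    return sum(per_class_acc) / n, sum(per_class_iou) / n


# Toy two-class example with 10 pixels per true class.
mpa, miou = segmentation_metrics([[8, 2], [1, 9]])
print(f"MPA = {mpa:.4f}, MIoU = {miou:.4f}")
```

With these definitions the per-class pixel accuracy equals the per-class recall, so the mean of one equals the mean of the other.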

2.2 Comparison of Accuracy and Computational Cost Across Backbone Networks

Table 3 Comparison of recognition performance of DeepLabV3+ with different backbone networks

| Backbone | MPA/% | MIoU/% | Recall/% | Parameters/M |
|---|---|---|---|---|
| Xception | 97.75 | 95.31 | 97.75 | 54.714 |
| ResNeXt | 94.14 | 90.21 | 94.14 | 103.589 |
| ResNet | 96.57 | 92.33 | 96.57 | 59.346 |
| MobileNetV2 | 97.00 | 93.01 | 97.00 | 5.818 |
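The trade-off in Table 3 is easier to read as a pair of relative figures. The snippet below is a small arithmetic sketch over the table's MIoU and parameter columns; `TABLE3` and `compare` are illustrative names, and the numbers are copied from the table.

```python
# Values copied from Table 3: backbone -> (MIoU in %, parameters in millions).
TABLE3 = {
    "Xception": (95.31, 54.714),
    "ResNeXt": (90.21, 103.589),
    "ResNet": (92.33, 59.346),
    "MobileNetV2": (93.01, 5.818),
}


def compare(candidate, reference, table=TABLE3):
    """Return the MIoU difference (percentage points) and the parameter ratio."""
    cand_miou, cand_params = table[candidate]
    ref_miou, ref_params = table[reference]
    return {
        "miou_diff_points": cand_miou - ref_miou,
        "param_ratio": cand_params / ref_params,
    }


result = compare("MobileNetV2", "Xception")
print(f"MIoU difference: {result['miou_diff_points']:+.2f} points")
print(f"Parameter ratio: {result['param_ratio']:.1%}")
```

For these values, MobileNetV2 uses about 10.6% of the parameters of Xception while its MIoU is 2.30 percentage points lower.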

Table 4 Comparison of tea bud recognition performance under different backbone networks

| Backbone | Tender_shoot | A_leaf | Two_leaves | Wrapped_bud |
|---|---|---|---|---|
| Xception | 0.93 | 0.96 | 0.91 | 0.98 |
| ResNeXt | 0.88 | 0.89 | 0.80 | 0.96 |
| ResNet | 0.90 | 0.94 | 0.84 | 0.96 |
| MobileNetV2 | 0.90 | 0.95 | 0.86 | 0.96 |

2.3 Effect of the ECA_ASPP Module on Model Accuracy

Table 5 Comparison of recognition performance with and without the ECA_ASPP module

| Net | MPA/% | MIoU/% |
|---|---|---|
| DeepLabV3+ (MobileNetV2 backbone) | 97.00 | 93.01 |
| DeepLabV3+ (MobileNetV2 backbone) + ECA_ASPP | 97.25 | 93.71 |

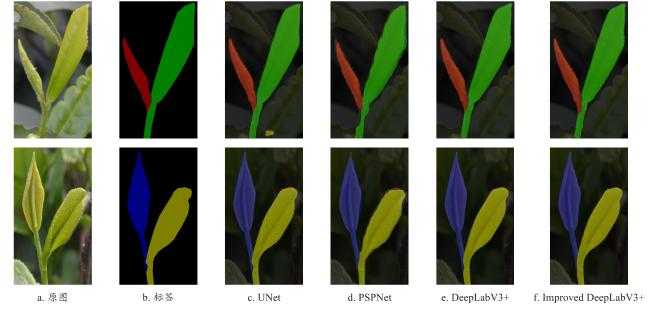

2.4 Comparison of Detection Performance Across Algorithms

Table 6 Comparison of results from different networks for tea bud recognition

| Net | MPA/% | MIoU/% | Recall/% | Time/s |
|---|---|---|---|---|
| UNet | 88.97 | 82.01 | 88.97 | 0.202 |
| PSPNet | 86.86 | 79.08 | 86.86 | 0.161 |
| DeepLabV3+ | 97.75 | 95.31 | 97.75 | 0.247 |
| Improved DeepLabV3+ | 97.25 | 93.71 | 96.85 | 0.165 |