0 Introduction

1 Materials and Methods

1.1 Data Acquisition and Preprocessing

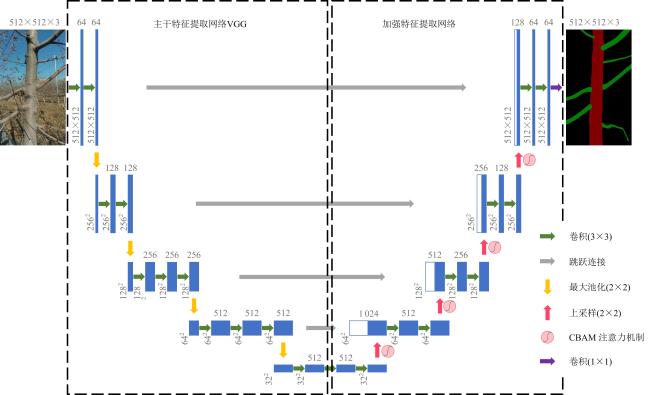

1.2 Improved U-Net Model

Table 1 Main training parameters of the improved U-Net model

| Parameter | Value |
|---|---|
| Freeze epochs | 50 |
| Freeze step size | 4 |
| Unfreeze epochs | 100 |
| Unfreeze step size | 2 |
| Learning rate | 0.0001 |
| Downsampling factor | 16 |
| Learning rate decay type | Cos |
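The freeze/unfreeze schedule and cosine decay listed in Table 1 can be illustrated with a short sketch. It assumes that the unfreeze epoch value (100) is the final epoch of training rather than 100 additional epochs, that the learning rate decays to a floor of 0, and that a single cosine curve spans the whole run; none of these details are stated in the table, and the names `cosine_lr` and `backbone_frozen` are hypothetical.

```python
import math

# Values copied from Table 1.
FREEZE_EPOCHS = 50
UNFREEZE_EPOCHS = 100  # assumption: treated as the last epoch of training
BASE_LR = 1e-4
MIN_LR = 0.0           # assumption: Table 1 gives no lower bound


def cosine_lr(epoch, total_epochs=UNFREEZE_EPOCHS, base_lr=BASE_LR, min_lr=MIN_LR):
    """Cosine-annealed learning rate for a 0-based epoch index."""
    progress = min(max(epoch / total_epochs, 0.0), 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def backbone_frozen(epoch, freeze_epochs=FREEZE_EPOCHS):
    """True while the feature-extraction network is kept frozen."""
    return epoch < freeze_epochs


# One (epoch, frozen?, learning rate) entry per training epoch.
schedule = [(e, backbone_frozen(e), cosine_lr(e)) for e in range(UNFREEZE_EPOCHS)]
```

Under these assumptions the learning rate starts at 0.0001, is halved by epoch 50 when the backbone is unfrozen, and approaches 0 at epoch 100.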

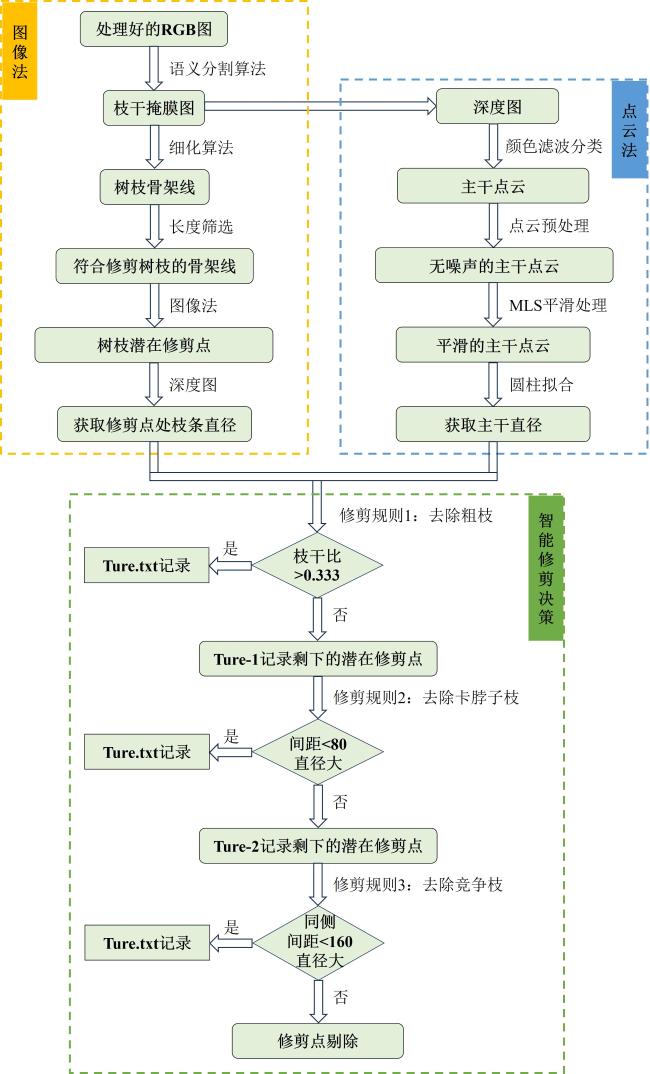

1.3 Pruning Point Localization

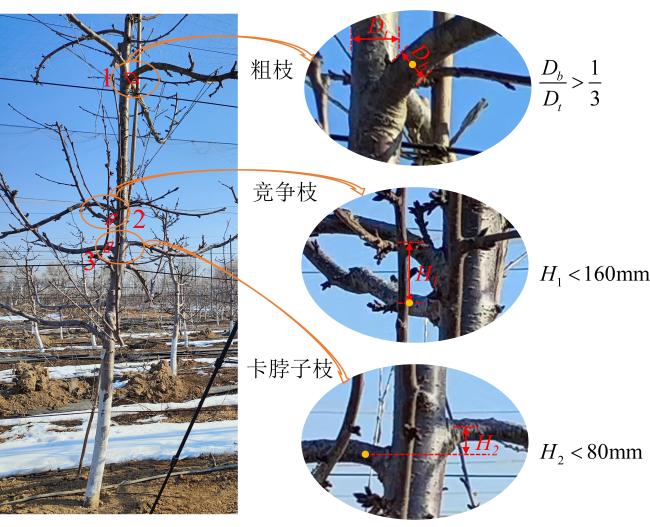

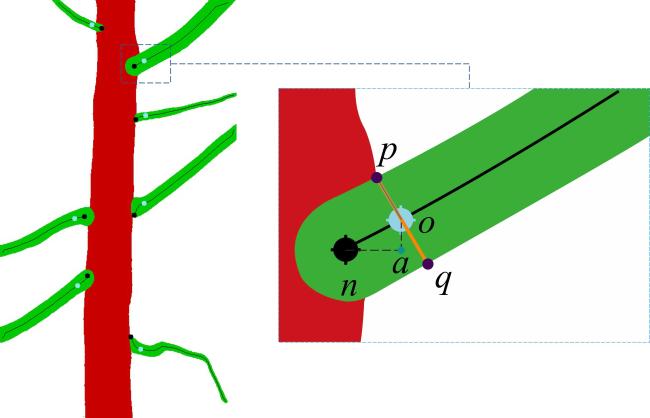

1.3.1 Potential Pruning Point Localization

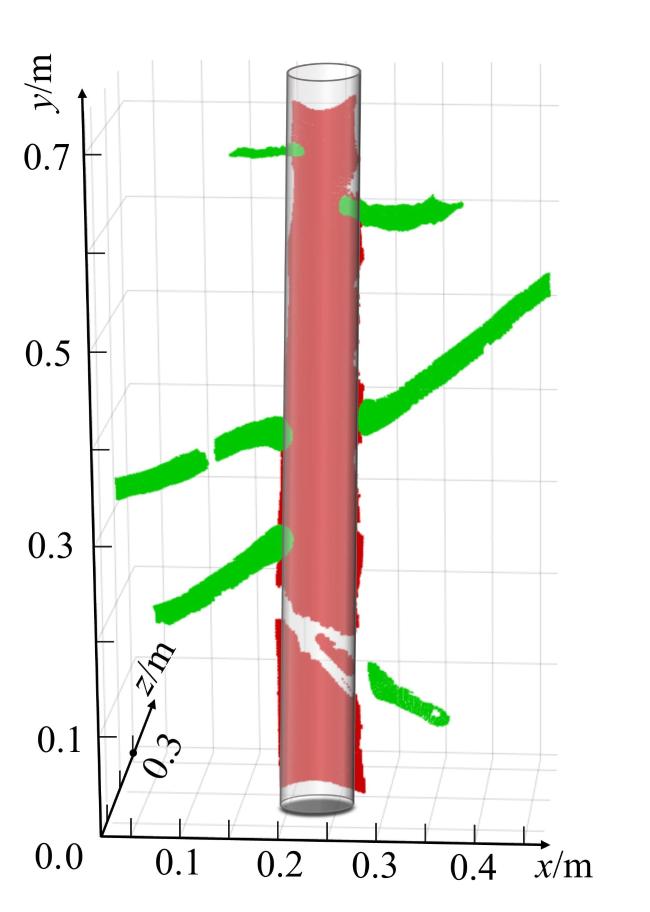

1.3.2 Diameter and Spacing Estimation

1.3.3 Final Determination of Pruning Points

1.4 Evaluation Metrics
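The segmentation metrics reported in Tables 2 and 3 (mIoU, mPA, Precision) are commonly derived from a pixel-level confusion matrix. The sketch below assumes the standard definitions: per-class IoU, per-class pixel accuracy, and per-class precision, each averaged over classes. Whether the paper averages Precision in exactly this way is not stated in this extract. The function name `segmentation_metrics` and the example matrix are hypothetical.

```python
def segmentation_metrics(confusion):
    """Return (mIoU, mPA, mean precision) in percent.

    confusion[i][j] is the number of pixels whose true class is i
    and whose predicted class is j.
    """
    n = len(confusion)
    row_sums = [sum(confusion[i]) for i in range(n)]  # true pixels per class
    col_sums = [sum(confusion[i][j] for i in range(n)) for j in range(n)]  # predicted pixels per class

    def ratio(num, den):
        # Classes with an empty denominator contribute 0 instead of raising.
        return num / den if den else 0.0

    ious, accs, precs = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        ious.append(ratio(tp, row_sums[k] + col_sums[k] - tp))  # TP / (TP + FP + FN)
        accs.append(ratio(tp, row_sums[k]))                     # TP / (TP + FN)
        precs.append(ratio(tp, col_sums[k]))                    # TP / (TP + FP)

    def mean_percent(values):
        return 100.0 * sum(values) / len(values)

    return mean_percent(ious), mean_percent(accs), mean_percent(precs)


# Hypothetical 2-class example (background, branch); not data from the paper.
example = [[50, 10],
           [5, 35]]
miou, mpa, precision = segmentation_metrics(example)
```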

2 Results and Analysis

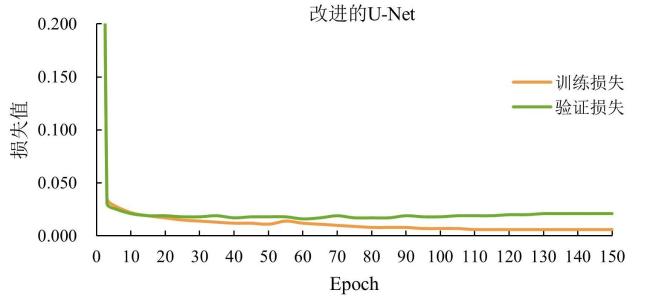

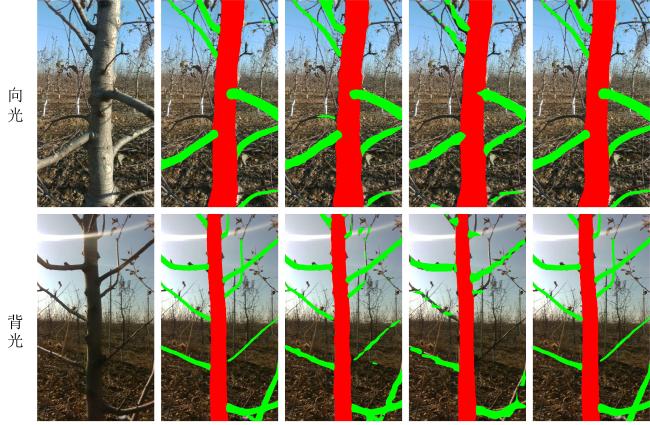

2.1 Improved U-Net Model

Table 2 Semantic segmentation results of four models for apple tree branch segmentation

| Model | Feature extraction network | mIoU/% | mPA/% | Precision/% |
|---|---|---|---|---|
| DeeplabV3+ | MobileNet V2 | 86.67 | 93.04 | 92.02 |
| DeeplabV3+ | Xception | 85.92 | 91.69 | 92.41 |
| PSPNet | MobileNet V2 | 74.93 | 81.83 | 87.19 |
| PSPNet | Resnet50 | 79.89 | 85.98 | 90.19 |
| U-Net | Not replaced | 88.46 | 92.78 | 93.14 |
| U-Net | VGG16 | 91.50 | 94.30 | 95.58 |
| U-Net | Resnet50 | 90.10 | 93.46 | 94.77 |
| Improved U-Net | VGG16 | 91.67 | 95.52 | 95.55 |

Table 3 Ablation study results of the improved U-Net for apple tree branch segmentation

| Experiment | VGG16 | CBAM | mIoU/% | mPA/% | Precision/% |
|---|---|---|---|---|---|
| 1 | × | × | 88.46 | 92.78 | 93.14 |
| 2 | √ | × | 91.50 | 94.30 | 95.58 |
| 3 | × | √ | 89.39 | 93.66 | 93.87 |
| 4 | √ | √ | 91.67 | 95.52 | 95.55 |

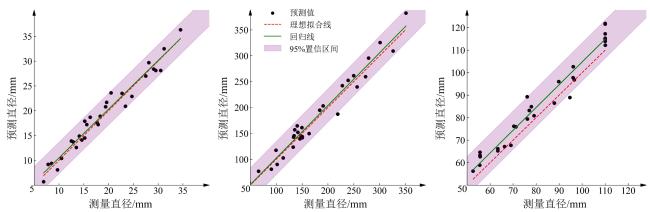

2.2 Diameter and Spacing Test Statistics

2.3 Pruning Point Localization Analysis

Table 4 Recognition results of apple tree pruning points based on the fusion of deep learning images and 3D point clouds

| Parameter | Value |
|---|---|
| Number of images | 50 |
| Total branches | 313 |
| Branches requiring pruning | 93 |
| Branches predicted for pruning | 97 |
| Incorrectly predicted branches | 6 |
| Missed branches | 2 |
| Correctly predicted branches | 87 |
| Success rate/% | 87.88 |
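The success rate in Table 4 can be checked arithmetically. This extract does not state the formula, so the sketch below is only one reading that reproduces the reported figure: correctly predicted branches divided by 99, which equals both predicted plus missed branches (97 + 2) and branches requiring pruning plus incorrect predictions (93 + 6). The variable names are hypothetical.

```python
# Counts copied from Table 4.
needs_pruning = 93
predicted = 97
wrong = 6
missed = 2
correct = 87

# Assumption: the denominator is predicted + missed (99),
# which also equals needs_pruning + wrong.
denominator = predicted + missed
success_rate = 100.0 * correct / denominator

print(f"{success_rate:.2f}")  # prints 87.88
```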